At the top of the learning curve for Generative AI

The mass adoption of generative AI models has yet to arrive.

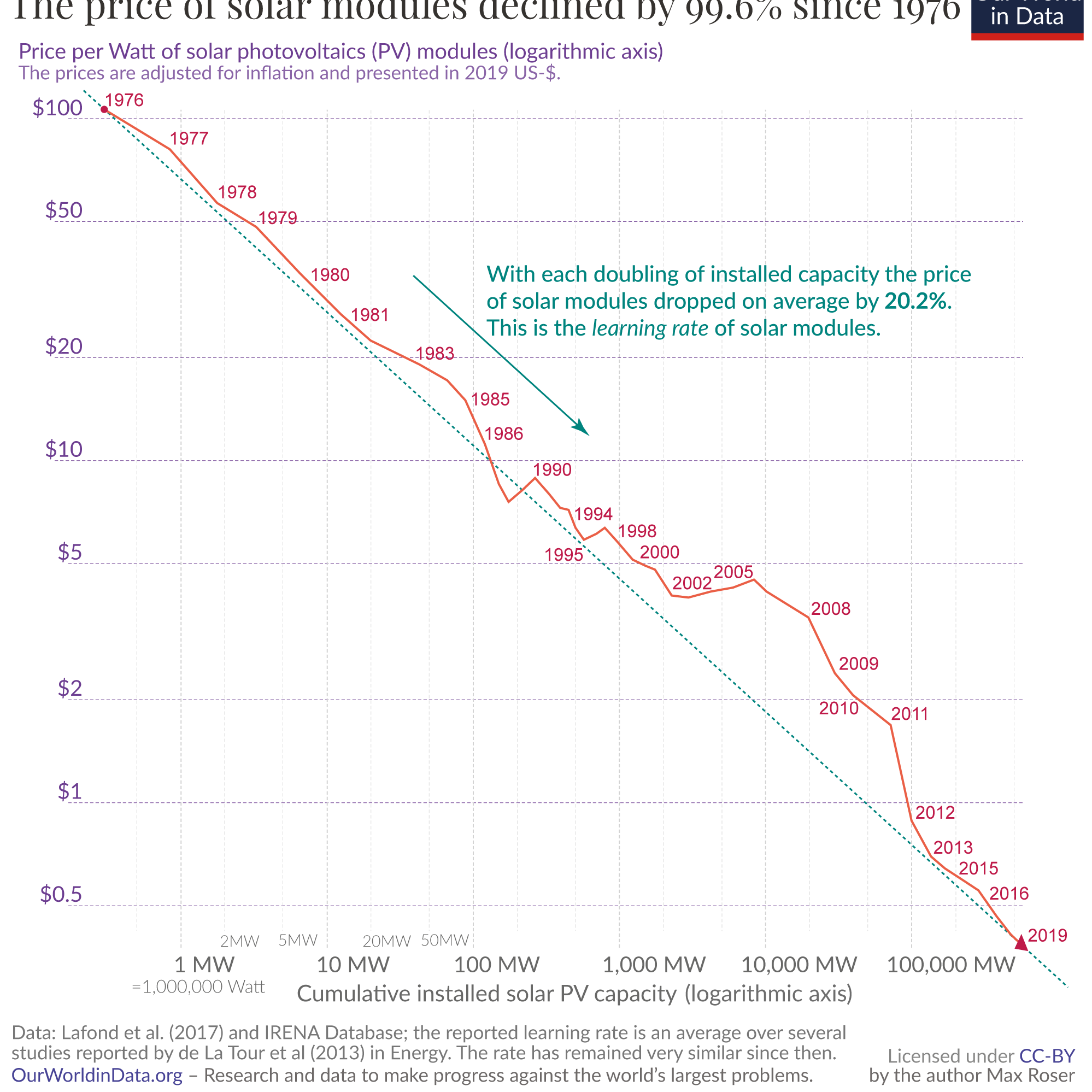

Its growth will be driven by fundamental factors as the technology starts its journey down the learning curve, lowering the cost of developing and deploying models for nearly every general application. In the next 3-7 years, generative AI models will be as commonplace as gradient boosting and decision tree models are today for prediction and classification tasks.
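To make the learning-curve idea concrete, here is a minimal sketch using Wright's law, the classic formulation in which unit cost falls by a fixed percentage with every doubling of cumulative output. The function name `unit_cost`, the starting cost of 100, and the 20% learning rate are illustrative assumptions, not estimates for generative AI.

```python
import math


def unit_cost(first_unit_cost: float, cumulative_units: float, learning_rate: float) -> float:
    """Wright's law: cost drops by `learning_rate` with each doubling of cumulative output."""
    # Fraction of the cost that remains after each doubling (0.8 for a 20% learning rate).
    progress_ratio = 1.0 - learning_rate
    # The exponent is negative for any learning rate between 0 and 1.
    exponent = math.log2(progress_ratio)
    return first_unit_cost * cumulative_units ** exponent


# Hypothetical inputs: the first unit costs 100 and each doubling is 20% cheaper.
for doublings in range(6):
    units = 2 ** doublings
    print(units, round(unit_cost(100.0, units, 0.20), 2))
```

Under these assumed numbers, each doubling multiplies the cost by 0.8, so the steepest absolute savings come early, at the top of the curve.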

By way of a simple analogy about the retail industry:

Within an apparel retailer, there are two critical business functions: branding and marketing, and supply chain and distribution. High-performance supply chain and distribution operations depend heavily on the accuracy of the firm’s demand and supply forecasts. Over-forecasting demand can easily wipe out the firm’s quarterly profits. Under-forecasting demand greatly limits growth.

Prior to the arrival of modern sample- and compute-efficient ML techniques (e.g. XGBoost and random forests) for predicting time series data, leading global retailers (e.g. Nike, P&G, L.L.Bean) crafted demand and supply forecasting models by hand. Even in the early 2000s and 2010s, these firms hired enormous teams of business analysts and managers to fine-tune and manage supply chain and distribution forecasts.

Yet, by the late 2010s, a novice programmer could easily train a highly accurate demand/supply forecast model (based on gradient boosting) with a few lines of code and a small historical dataset. These models power the modern e-commerce world (e.g. Amazon, Walmart), predicting the demand and supply of their product lines down to the minute and hour.
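As a rough illustration of how little code such a model needs, the sketch below boosts decision stumps on a tiny synthetic demand history. It is a pure-Python toy of the idea behind libraries like XGBoost, not their API; the data, the single month-of-year feature, and every name here (`fit_stump`, `fit_boosted_stumps`, `predict`) are assumptions made up for the example.

```python
import math


def fit_stump(rows, residuals):
    """Pick the (feature, threshold) split that minimizes squared error on the residuals."""
    best = None
    for feature in range(len(rows[0])):
        # Skip the largest value so the right-hand side of the split is never empty.
        for threshold in sorted({row[feature] for row in rows})[:-1]:
            left = [r for row, r in zip(rows, residuals) if row[feature] <= threshold]
            right = [r for row, r in zip(rows, residuals) if row[feature] > threshold]
            left_mean, right_mean = sum(left) / len(left), sum(right) / len(right)
            error = (sum((r - left_mean) ** 2 for r in left)
                     + sum((r - right_mean) ** 2 for r in right))
            if best is None or error < best[0]:
                best = (error, feature, threshold, left_mean, right_mean)
    return best[1:]


def stump_value(stump, row):
    feature, threshold, left_mean, right_mean = stump
    return left_mean if row[feature] <= threshold else right_mean


def fit_boosted_stumps(rows, targets, rounds=200, learning_rate=0.1):
    """Gradient boosting for squared error: each stump is fit to the current residuals."""
    base = sum(targets) / len(targets)
    predictions = [base] * len(targets)
    stumps = []
    for _ in range(rounds):
        residuals = [t - p for t, p in zip(targets, predictions)]
        stump = fit_stump(rows, residuals)
        stumps.append(stump)
        predictions = [p + learning_rate * stump_value(stump, row)
                       for row, p in zip(rows, predictions)]
    return base, learning_rate, stumps


def predict(model, row):
    base, learning_rate, stumps = model
    return base + sum(learning_rate * stump_value(s, row) for s in stumps)


# Synthetic history (an assumption): 36 months of seasonal demand with a small wobble.
months = [(i % 12) + 1 for i in range(36)]
rows = [[m] for m in months]
targets = [100 + 30 * math.sin(2 * math.pi * m / 12) + ((i * 7) % 5) - 2
           for i, m in enumerate(months)]

model = fit_boosted_stumps(rows, targets)

# Compare against the naive forecast of always predicting average demand.
mean_demand = sum(targets) / len(targets)
baseline_mae = sum(abs(t - mean_demand) for t in targets) / len(targets)
model_mae = sum(abs(t - predict(model, row)) for row, t in zip(rows, targets)) / len(targets)
print(f"baseline MAE: {baseline_mae:.2f}, boosted MAE: {model_mae:.2f}")
print("forecast for March:", round(predict(model, [3]), 1))
```

The errors printed here are measured on the training data, so they show fit rather than forecast accuracy. A real forecast would hold out recent months for evaluation and add features such as price, promotions, and lagged demand.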

While large language models have disrupted the world, the underlying mechanics and intuitions have not changed. The primary difference is that transformer-based LLMs require substantially more (1) compute and (2) data: many orders of magnitude more of both ingredients for the models to generalize.

The barriers to development will continue to decline.

Compute supply is currently constrained. Yet chip supply has been classically cyclical over the past 40-60 years, and it will soon enter a period of oversupply. There will continue to be systems engineering constraints to solve, but I am hopeful that the current rate of capital investment will be sufficient to overcome them (e.g. the physical practicality of a $10B datacenter vs. a $100B datacenter in terms of connectivity, power, and management).

Data will be a more multifaceted and complex problem, with at least three factors: (1) specialized/fit-for-purpose data, (2) volume of world representational data, and (3) systems engineering to ease the distribution and ingestion of data in large-scale training.

Specialized data (#1) and volume of world representational data (#2) are somewhat orthogonal to each other, but it is important to understand them together.

Specialized data (#1) is a pre-existing constraint of ML for current users and firms; nothing has fundamentally changed about whether a firm or user has high-quality proprietary data or not. If a firm has historical data relevant to its products, then it is absolutely in the best position to use that data to develop and deploy generative AI applications.

World representational data (#2) is an ongoing research problem. Current datasets are known to be far from an exhaustive representation of our world, yet there is some fear of “running out of data”. Capital continues to flow to firms that conduct foundational research into data formats, modalities, and techniques for representing our entire world.

Yet, with only a sliver of world representational data (mostly from the internet), leading research labs have already shown the tremendous potential of large models in their current releases. While continued scaling will likely bring diminishing returns, I am hopeful that research into modalities and formats will deliver some step-change improvement in this area. For example, neural networks are notoriously bad at representing three dimensions, and various tricks are used to work around this problem, yet humans experience the world only in three dimensions. Humans also learn representations with much greater sample efficiency.

Scaling up data and compute infrastructure (#3) is a classic systems problem that is being solved by firms like Databricks and leading AI labs. This is unlikely to be a primary constraint to value creation.

The value created by generative AI will accrue to users and the rest of the global economy.

As these barriers decline, training and/or deploying generative AI models and applications will become commonplace. We are likely far from reaching the bottom of the learning curve for this category of technology. As a result, leading model builders today must rely on their distribution-driven economies of scale to grow the market and retain share.

Fortunately, because we are still at the top of the curve and in the early innings, there is still much to do to realize this vision. So much has yet to be built, and many opportunities lie ahead.